In the rapidly evolving landscape of technology, Artificial Intelligence (AI) has emerged as a double-edged sword. While it holds the potential to revolutionise sectors from healthcare to governance, it is also increasingly being misused for disinformation and propaganda. Nowhere is this more evident than in the misuse of AI tools by some Pakistani users to target India, disrupt social harmony, and manipulate geopolitical narratives.

AI as a Propaganda Machine

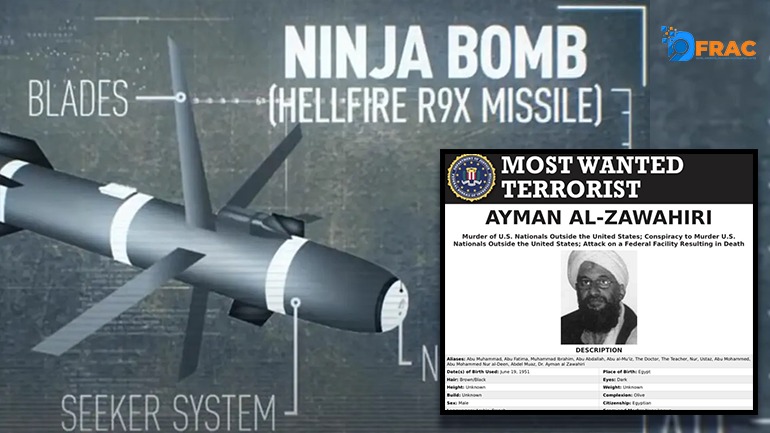

The last few years have seen a surge in AI-generated content—images, deepfakes, voice clones, and text-based misinformation—being deployed to spread anti-India narratives across digital platforms. What was once the realm of expensive state-run operations can now be executed by individuals or small networks using freely available AI tools.

From fabricated speeches to deepfake videos of Indian leaders, these pieces of synthetic media are designed not only to mislead but also to erode public trust in institutions, inflame communal tensions, and influence elections.

Case Studies of Abuse

Deepfake Disinformation During Conflicts

During high-tension events, such as the abrogation of Article 370 in Jammu & Kashmir or India-Pakistan border skirmishes, Pakistani accounts have circulated deepfake videos purporting to show Indian Army personnel abusing civilians or Indian leaders making inflammatory statements. Because these videos are often hard to distinguish from genuine footage, they gain traction quickly on social media before fact-checkers can intervene.

AI-Generated News Portals

Several shadowy websites, many operating under names that mimic those of Indian or international outlets, have been identified as spreading AI-generated news articles. These pieces, frequently produced with large language models, are laced with anti-India sentiment and push narratives about human rights abuses, political instability, or religious intolerance, all aimed at tarnishing India’s image globally.

Fake Voices and “Audio Leaks”

Using voice cloning technology, some networks have released fake “leaked” calls or statements involving Indian politicians, journalists, or intelligence officials. These audio clips are often circulated through WhatsApp groups, Telegram channels, and Twitter (now X) to influence opinion, particularly among diaspora populations.

Coordinated Campaigns on Social Media

Many of these AI-fuelled disinformation campaigns are not random. They appear to be part of coordinated influence operations, often traced back to Pakistani IP addresses or proxy networks. Bots and fake accounts amplify the content with hashtags, manipulated images, and AI-generated comments, creating the illusion of mass consensus.

Hashtags such as #KashmirUnderSiege and #IndiaOppressesMuslims are often pushed into trending lists with these tools to reach international audiences, especially during events like G20 summits or United Nations sessions.

India’s Response and Challenges

Indian authorities, including cybercrime units and other agencies, are increasingly alert to these AI-based threats. Fact-checking organisations such as DFRAC (Digital Forensics Research and Analytics Center) and PIB Fact Check have flagged dozens of such fake videos and articles in recent months.

However, AI innovation is outpacing regulation. Social media platforms are still catching up on AI-detection mechanisms. Worse, generative AI platforms, despite enforcing content policies, often fail to catch regional-language deepfakes or culturally specific propaganda.

The Need for Digital Vigilance

What makes AI-driven disinformation so dangerous is its scalability and believability. In a country as large and diverse as India, even a single viral deepfake or manipulated audio clip can lead to real-world violence or diplomatic fallout.

To counter this, India needs:

AI regulation with teeth, focused on the misuse of generative content.

Bilateral cyber dialogues with partner nations to track cross-border digital threats.

Public awareness campaigns to educate users on how to identify fake content.

Collaboration between tech companies and law enforcement to flag and remove malicious AI-generated content quickly.

Artificial Intelligence is not inherently dangerous—but in the wrong hands, it becomes a potent weapon. The misuse of AI by Pakistani entities targeting India is not just a cybersecurity issue—it’s a threat to national integrity, public trust, and regional peace.

As India strides into a tech-driven future, it must do so with its eyes wide open to the threats posed by the weaponisation of AI, and with the resolve to counter them through innovation, diplomacy, and vigilance.

(The author holds a PhD in Artificial Intelligence. Besides working on information warfare, he is an advocate for Sufism and Indian Islamic cultural heritage. He can be reached at shujaatquadri@gmail.com.)