Executive Summary

- The investigation uncovers a global cryptocurrency scam using deepfake technology and fake news sites to impersonate celebrities and promote fraudulent investment platforms.

- AI-generated videos and fabricated interviews of celebrities like Amitabh Bachchan, Neha Kakkar, and Mandira Bedi were used to mislead audiences.

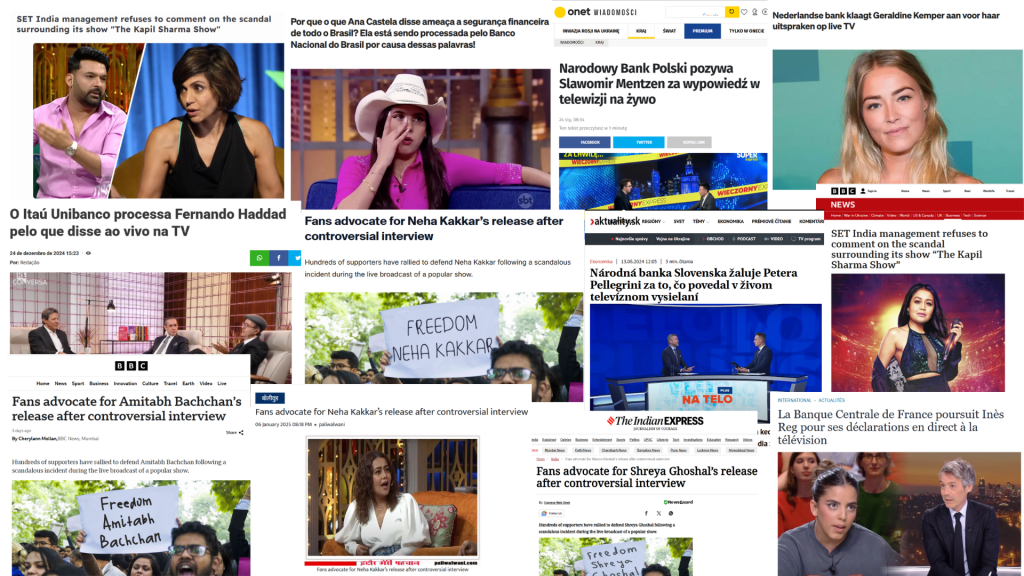

- Scammers cloned layouts of trusted news brands (e.g., BBC, Indian Express) to create fake articles endorsing scam platforms.

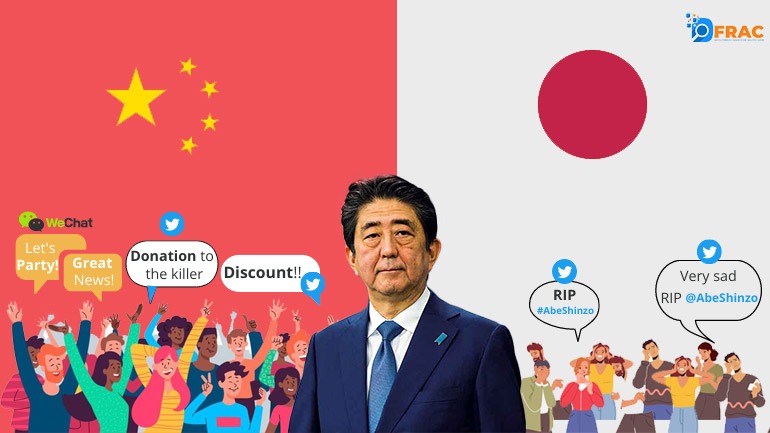

- The scam spread across South Asia, Europe, and Latin America, using multiple languages (Spanish, Turkish, Portuguese, French, etc.).

- The DISARM Framework was applied to map the actors, tactics, techniques, and target audiences involved in the disinformation ecosystem.

When Amitabh Bachchan wakes up a crypto millionaire overnight—except he never invested a rupee—that’s not Bollywood magic, it’s a deep‑fake crypto scam.

From India’s megastar Amitabh Bachchan to international figures like Elon Musk and Oprah Winfrey, high-profile identities are being hijacked to create convincing narratives of overnight crypto success. These stories, often hosted on websites designed to mimic legitimate media outlets, drive users to dubious platforms promising quick riches. What appears to be a financial opportunity is, in reality, a carefully constructed trap that exploits public trust in both celebrities and news institutions.

Our investigation combines open-source intelligence (OSINT), semantic similarity analysis, AI-content detection, and Meta Ad Library data to unravel the inner workings of this campaign. By applying the DISARM Framework—a structured approach to analyzing disinformation threats—we map the tactics, techniques, and procedures (TTPs) employed in these scams. This report aims not only to expose the deceptive ecosystem but also to underscore the urgent need for regulatory action, platform accountability, and public media literacy in the fight against AI-fueled disinformation.

Investigation & Methodology

The investigation began with the observation of a surge in deepfake advertisements featuring Indian celebrities across online platforms. These deceptive ads—prominently showcasing figures like Amitabh Bachchan and other public icons—were widely circulated on social media, drawing our attention to a potentially large-scale scam.

We systematically monitored and documented 320 such advertisements across Twitter (X) and Meta platforms during the months of February, March, and April 2025. Notably, the presence of similar content was traced back to as early as 2023, signaling an ongoing, evolving disinformation campaign.

To investigate further, we engaged directly with these ads—clicking on the links to study their landing pages and URL structures. Each ad redirected to a website mimicking the visual identity of globally trusted news organizations, including BBC, The Indian Express, and Le Monde. These counterfeit pages were designed with meticulous detail, replicating the fonts, layouts, and logos of the original outlets to maximize believability.
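Beyond the visual cloning, the hostname of a landing page can be compared with the outlet it imitates. The following is a minimal sketch of that check, not the tooling used in this investigation. The `OFFICIAL` mapping and the `impersonated_brand` helper are hypothetical names introduced for illustration, and simple substring matching like this can produce false positives on short brand names.

```python
# Hypothetical mapping of brand keyword -> domains the real outlet publishes on.
OFFICIAL = {
    "indianexpress": {"indianexpress.com"},
    "bbc": {"bbc.com", "bbc.co.uk"},
    "lemonde": {"lemonde.fr"},
}

def impersonated_brand(hostname: str):
    """Return the brand a hostname appears to imitate, or None."""
    host = hostname.lower().removeprefix("www.")
    for brand, domains in OFFICIAL.items():
        # A host is official if it is, or is a subdomain of, a known domain.
        is_official = any(host == d or host.endswith("." + d) for d in domains)
        # Hyphens are dropped so "indian-express" still matches the keyword.
        if brand in host.replace("-", "") and not is_official:
            return brand
    return None

for candidate in ("indianexpress.news", "www.bbc.co.uk", "example.org"):
    print(candidate, "->", impersonated_brand(candidate))
```

A hostname that carries a brand keyword but sits outside the outlet's own domains is only a signal for manual review, not proof of fraud.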

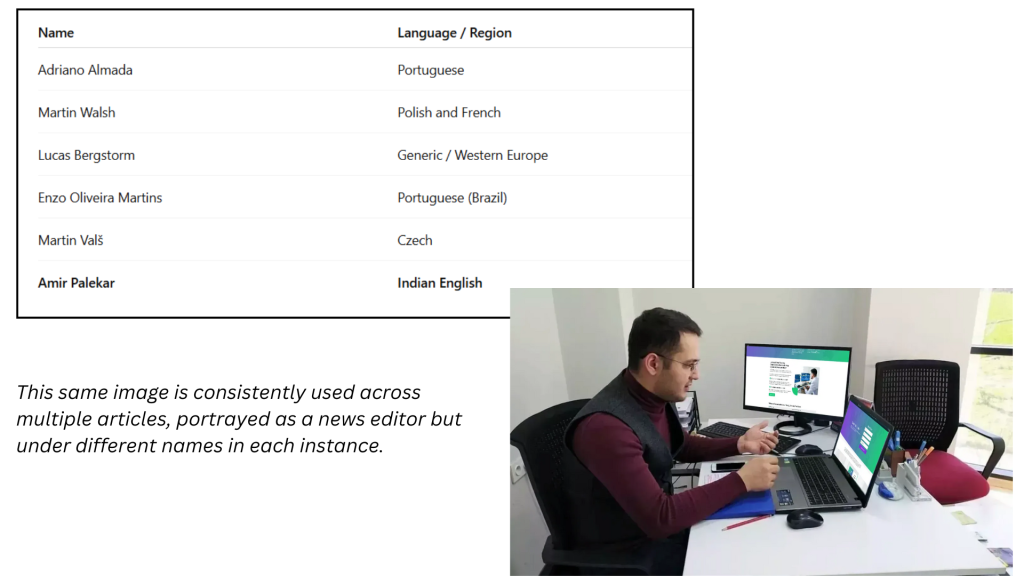

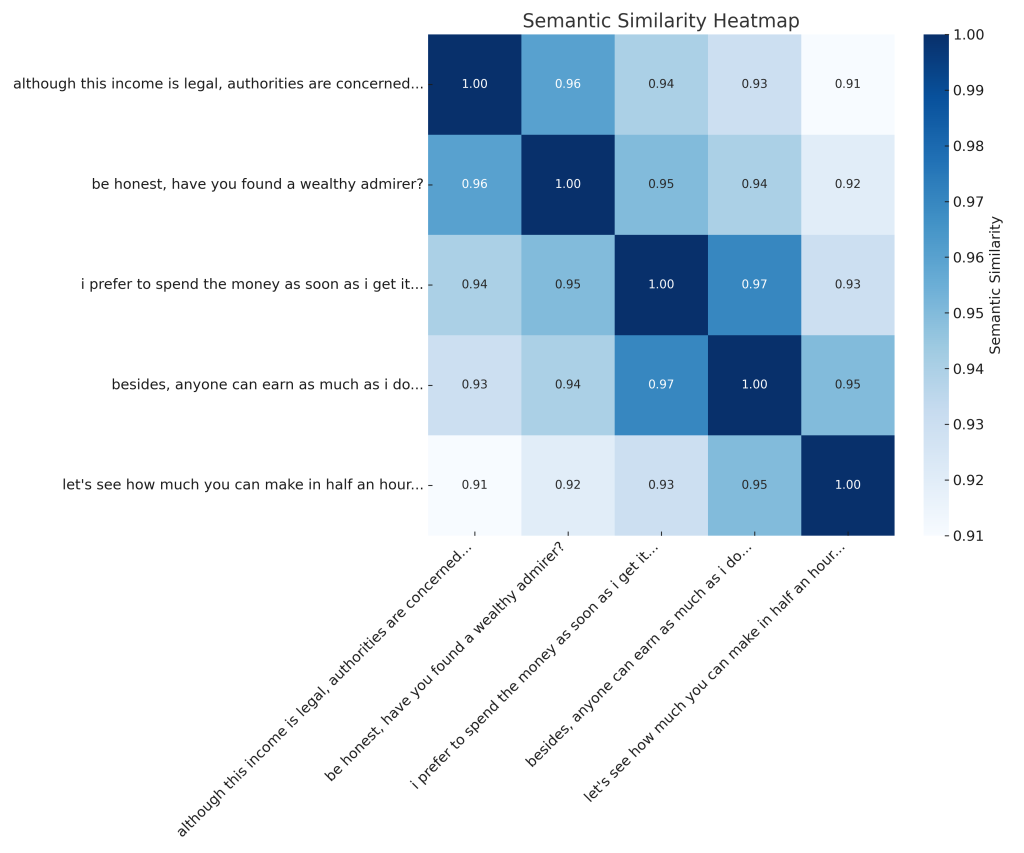

Upon scrolling through the articles, we encountered fabricated interviews with celebrities, presenting a narrative in which they supposedly invested in a crypto platform and amassed immense wealth. These articles often included an image of a man seated in a workspace environment, falsely labeled as the news editor of the outlet, who also claimed to have personally profited from the platform. To bolster the illusion of authenticity, fake user comments were embedded below the articles, praising the scheme and endorsing its legitimacy.

We then ran a reverse image search on the alleged editor’s photograph. The same image turned out to be in use on more than 50 websites in various languages, including Portuguese, Spanish, Brazilian Portuguese, Ukrainian, English (South Asian), and French. Despite the linguistic differences, each website replicated the same scam structure and narrative.

Further analysis of the URLs and website domains revealed additional red flags. Many of these domains were either newly registered (often within 2–3 days of our discovery) or no older than one year, with multiple flagged as harmful or suspicious by threat detection systems. This pattern indicated a coordinated strategy of launching short-lived scam websites with rapid deployment cycles across different regions and languages to evade detection.
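The domain-age check described above can be sketched as follows. This is an illustrative example under stated assumptions: the records are hypothetical placeholders (not domains from our dataset), the registration dates would normally come from a WHOIS lookup, and the bucket thresholds simply mirror the ranges mentioned in the text.

```python
from datetime import date

def domain_age_days(registered_on: date, observed_on: date) -> int:
    """Days between WHOIS registration and the day the domain was observed."""
    return (observed_on - registered_on).days

def age_bucket(age_days: int) -> str:
    # Thresholds are assumptions based on the ranges described in the report.
    if age_days <= 3:
        return "just registered"
    if age_days <= 365:
        return "under one year"
    return "older than one year"

# Hypothetical records: (domain, registration date, date the ad was seen).
observations = [
    ("example-scam-a.test", date(2025, 3, 10), date(2025, 3, 12)),
    ("example-scam-b.test", date(2024, 6, 1), date(2025, 3, 12)),
    ("example-old.test", date(2019, 1, 1), date(2025, 3, 12)),
]

buckets = {d: age_bucket(domain_age_days(r, o)) for d, r, o in observations}
for domain, bucket in buckets.items():
    print(f"{domain}: {bucket}")
```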

Fabricated News Editor Identity Across Languages

A striking element of this disinformation operation was the recurring use of a single male image, presented as the “news editor” across numerous cloned websites. Through reverse image search, we discovered that this identical photo was repurposed with different names and roles in various languages and regional sites, further illustrating the scale and coordination of the scam.

The aliases used for this fake editor include:

In addition, we observed a repetitive pattern in the article comments, with identical wording and phrases being copied across multiple posts.
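One simple way to surface this kind of copy-pasted commentary is to normalize each comment and group identical texts by the pages they appear on. The sketch below assumes comments have already been scraped into a dictionary keyed by page. The page names and comment texts are invented for illustration.

```python
import re
from collections import defaultdict

def normalize(comment: str) -> str:
    """Lowercase, strip punctuation, and collapse whitespace."""
    text = re.sub(r"[^\w\s]", "", comment.lower())
    return " ".join(text.split())

def repeated_comments(comments_by_page: dict[str, list[str]]) -> dict[str, set[str]]:
    """Map each normalized comment to the pages it appears on, keeping repeats only."""
    seen = defaultdict(set)
    for page, comments in comments_by_page.items():
        for comment in comments:
            seen[normalize(comment)].add(page)
    return {text: pages for text, pages in seen.items() if len(pages) > 1}

# Hypothetical scraped comments.
sample = {
    "page-a": ["I made money in one week!", "Is this real?"],
    "page-b": ["i made  money in one week", "Thanks for sharing."],
}

duplicates = repeated_comments(sample)
print(duplicates)
```

Exact matching after normalization misses lightly reworded comments. A fuzzy or embedding-based comparison would catch more, at the cost of more false positives.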

Key findings from our dataset include:

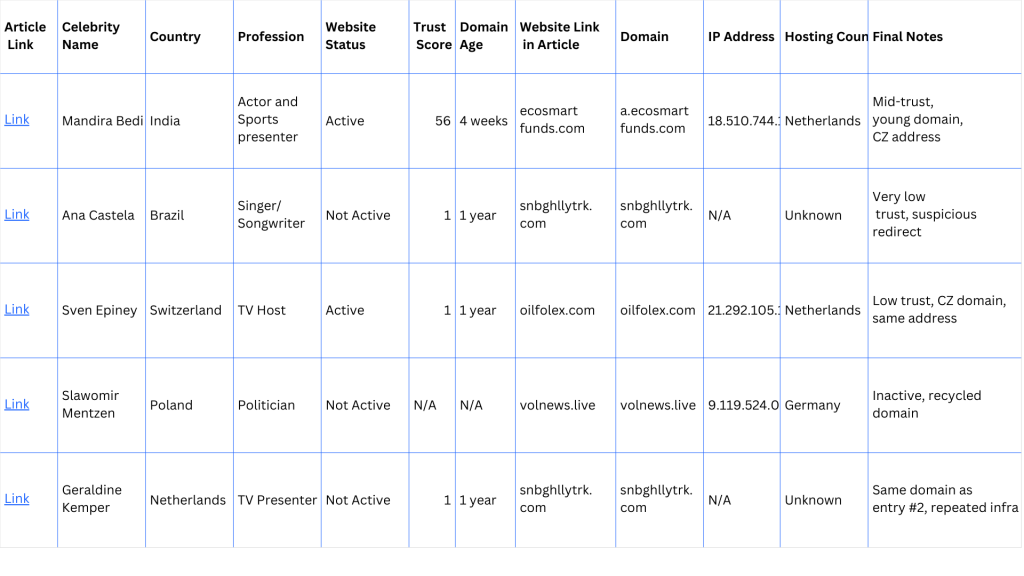

Each article includes a redirect link to a domain (e.g., “ecosmartfunds.com”, “snbghllytrk.com”) that is analyzed for trust score, domain age, and IP geolocation:

- The trust scores are dangerously low (1/100) for most domains, with only one (ecosmartfunds.com) scoring moderately (56/100).

- The domains are very young (mostly a few weeks to about 1 year old), which is typical of scam networks that rotate sites frequently.

- Repeated addresses (like “Jaurisova 515/4 Praha”) and IPs in the Netherlands and Germany suggest hosting through known anonymous or offshore services.

- Suspicious redirect domains like “snbghllytrk.com” appear multiple times, hinting at a shared infrastructure behind the scams.

- Inactive or placeholder websites, along with hidden WHOIS details, support the conclusion that these sites are not legitimate.
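The shared-infrastructure signals listed above (repeated redirect domains, repeated registrant addresses, hidden WHOIS details) can be counted with a few lines of code. This is a minimal sketch with hypothetical rows. The domains and addresses are placeholders rather than entries from our dataset.

```python
from collections import Counter

def shared(values):
    """Return values seen on more than one article, ignoring missing ones."""
    counts = Counter(v for v in values if v)
    return {value: n for value, n in counts.items() if n > 1}

# Hypothetical rows: (article, redirect domain, registrant address).
# None marks WHOIS details that were hidden.
rows = [
    ("article-1", "redirect-a.test", "Address A"),
    ("article-2", "redirect-a.test", None),
    ("article-3", "redirect-b.test", "Address A"),
    ("article-4", "redirect-c.test", None),
]

shared_redirects = shared(domain for _, domain, _ in rows)
shared_addresses = shared(address for _, _, address in rows)
hidden_whois = sum(1 for _, _, address in rows if address is None)

print(shared_redirects, shared_addresses, hidden_whois)
```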

Overall, the data shows a pattern of AI-generated or fake content using low-trust, newly registered domains, often targeting public figures to gain credibility. These are likely part of a coordinated misinformation or phishing campaign.

Use of Cheap Fakes

We analyzed the images used in the ads through AI-based image detection tools, which revealed that most visuals were heavily edited, low-quality deepfakes of celebrities. These manipulated images are intentionally designed to provoke curiosity and urgency, increasing the likelihood that viewers will click on the ad.

AI Content Analysis & Domain Intelligence

To understand the scale and tactics used in this disinformation campaign, we conducted a detailed forensic and AI-based analysis of the fraudulent websites, visuals, and hosting infrastructure.

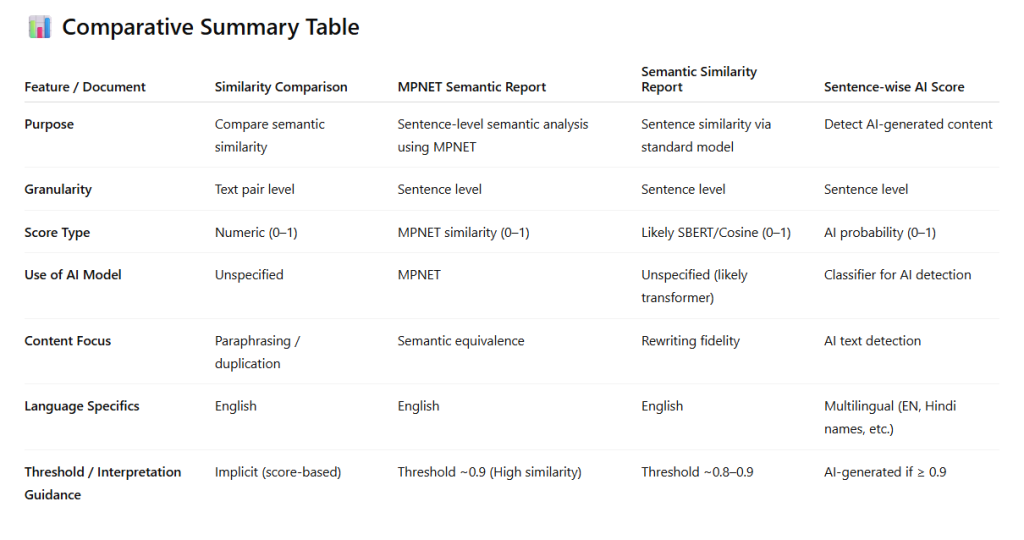

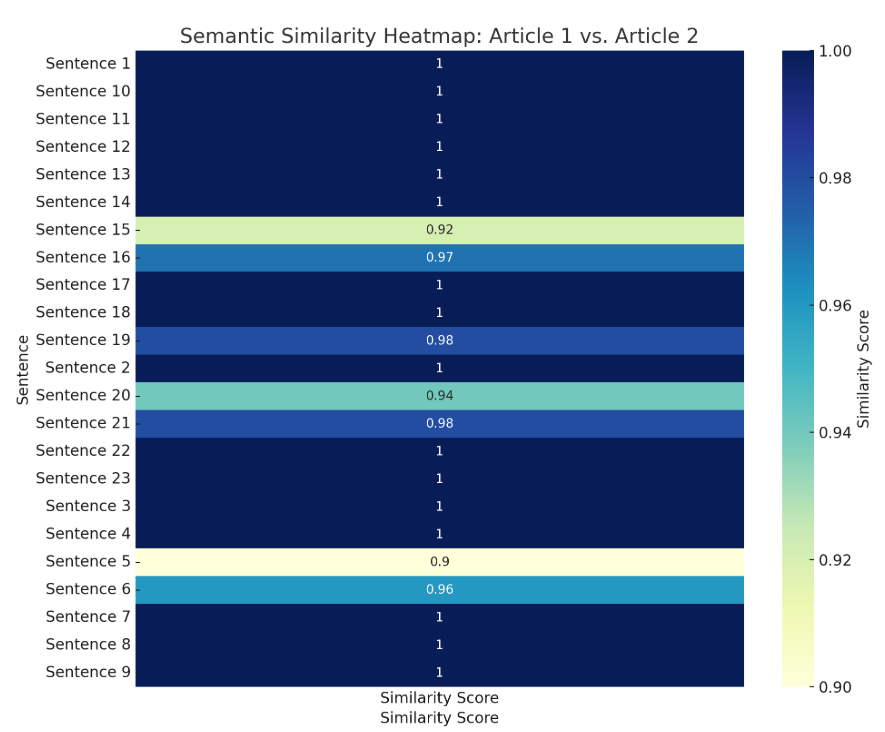

MPNET Semantic Similarity Report

We compared two articles sentence by sentence using the MPNET model. For each pair, the report lists the sentence from Article 1, the corresponding sentence from Article 2, and a similarity score (ranging from 0.6 to 1.0). Most pairs score 1.00, which shows that the two articles use almost identical phrasing and structure. In other words, the matched sentence pairs are real-world evidence that the two articles are semantically the same.
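The pairing-and-scoring step can be illustrated with a short sketch. To keep it runnable without downloading a model, it uses a bag-of-words vector (`toy_embed`, a hypothetical stand-in) instead of real MPNET embeddings. In practice the `embed` argument would be replaced by a function that returns sentence embeddings from an MPNET model. Pairing sentences by position is also an assumption, and the example sentences are invented.

```python
import math
from collections import Counter

def toy_embed(sentence: str) -> Counter:
    """Stand-in for an MPNET embedding: a bag-of-words count vector."""
    return Counter(sentence.lower().split())

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def pairwise_scores(article_1, article_2, embed=toy_embed):
    """Score sentences that sit at the same position in each article."""
    return [round(cosine(embed(s1), embed(s2)), 2) for s1, s2 in zip(article_1, article_2)]

# Invented example sentences.
article_1 = ["He invested a small sum and earned a fortune", "The platform is open to everyone"]
article_2 = ["He invested a small sum and earned a fortune", "Registration closes tonight"]

scores = pairwise_scores(article_1, article_2)
print(scores)
```

With real sentence embeddings, paraphrased sentences would also score high, which a word-count vector cannot capture.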

Sentence-wise AI score

We analysed AI probability scores for each sentence across several articles (labeled Article 1 to Article 5). For every sentence, the report lists the sentence text and its AI-generated probability score. Scores usually range from 0.90 to 0.99, meaning the detector judges these sentences to be AI-generated. The content includes clickbait-style news or promotional articles involving celebrities (e.g., Amitabh Bachchan, Kapil Sharma), with flashy or emotional phrases. The report is data-heavy, listing dozens of sentences judged to be AI-written.

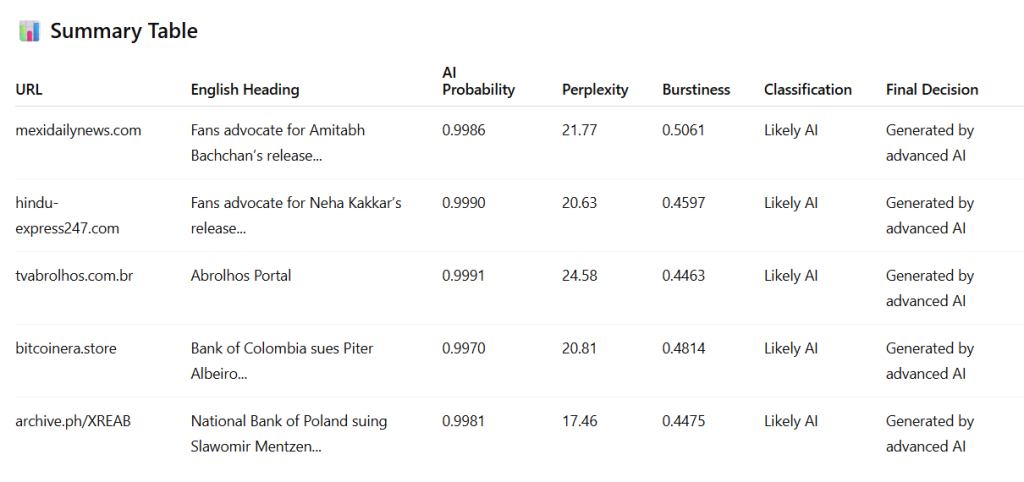

AI Probability, Perplexity, and Burstiness

We evaluated the authenticity of online articles from various websites by analyzing key AI-detection metrics: AI Probability, Perplexity, and Burstiness. In our analysis, we used pre-trained models to detect whether a text was AI-generated or human-written. Since these models are not 100% accurate, we also manually reviewed the articles using AI tools like ChatGPT and Grammarly. Based on these evaluations, we reached our final conclusions.

Additionally, we calculated perplexity for each article. In AI text detection, perplexity measures how unpredictable a piece of text is. Human-written text usually has higher perplexity, while AI-generated text tends to be more predictable and has lower perplexity.
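As a rough illustration, perplexity is the exponential of the negative mean log-probability that a language model assigns to each token. The sketch below assumes the per-token probabilities are already available from some model. The numbers are made up to show the contrast between predictable and surprising text.

```python
import math

def perplexity(token_log_probs: list[float]) -> float:
    """exp of the negative mean natural-log probability per token."""
    if not token_log_probs:
        raise ValueError("need at least one token")
    return math.exp(-sum(token_log_probs) / len(token_log_probs))

# Hypothetical per-token probabilities assigned by a language model.
predictable = [math.log(p) for p in (0.5, 0.5, 0.5, 0.5)]
surprising = [math.log(p) for p in (0.5, 0.05, 0.5, 0.05)]

print(perplexity(predictable))  # lower: every token was easy to guess
print(perplexity(surprising))   # higher: some tokens were unexpected
```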

We also measured burstiness, which refers to variations in sentence length and complexity. Human writing naturally includes a mix of long and short sentences, different levels of complexity, and diverse word choices. In contrast, AI-generated text tends to be more uniform, with consistent sentence structures and patterns.
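Burstiness has no single agreed definition. One common proxy, used here purely as an assumption for illustration, is the coefficient of variation of sentence lengths: the standard deviation divided by the mean. The splitting rule and the sample texts below are simplified examples, not the exact procedure used in our analysis.

```python
import re
import statistics

def sentence_lengths(text: str) -> list[int]:
    """Word counts per sentence, splitting naively on ., ! and ?."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]

def burstiness(text: str) -> float:
    """Coefficient of variation of sentence length (0.0 means fully uniform)."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)

uniform = "One two three. Four five six. Seven eight nine."
varied = "Stop. This second sentence runs on for quite a few more words than the first. Fine."

print(burstiness(uniform), burstiness(varied))
```

A value near zero indicates uniform sentence lengths. On its own this is weak evidence, which is why it is combined with the other metrics and manual review.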

By analyzing these parameters along with manual analysis, we arrived at our final decisions.

Meta Ad Analysis (India-Focused)

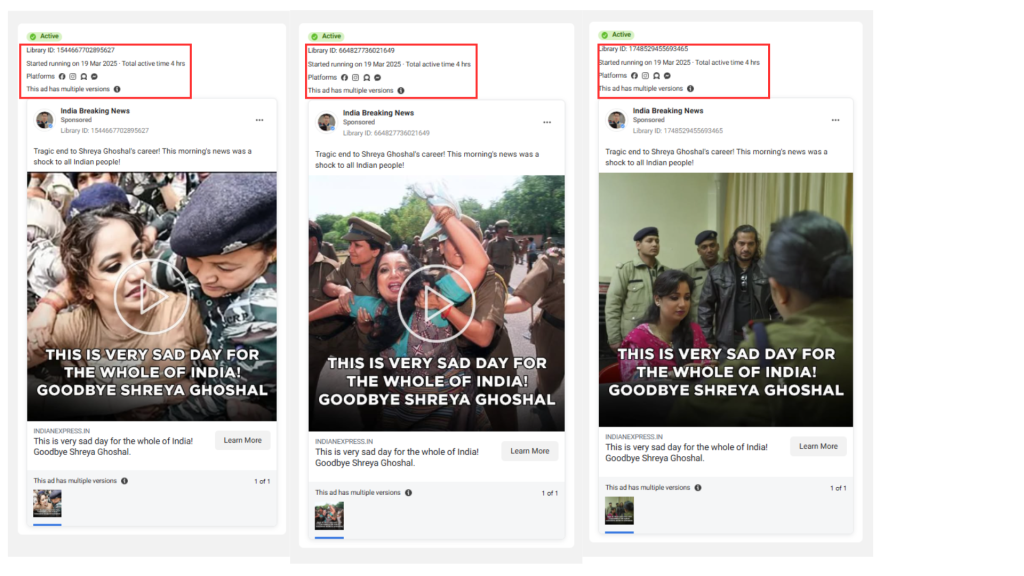

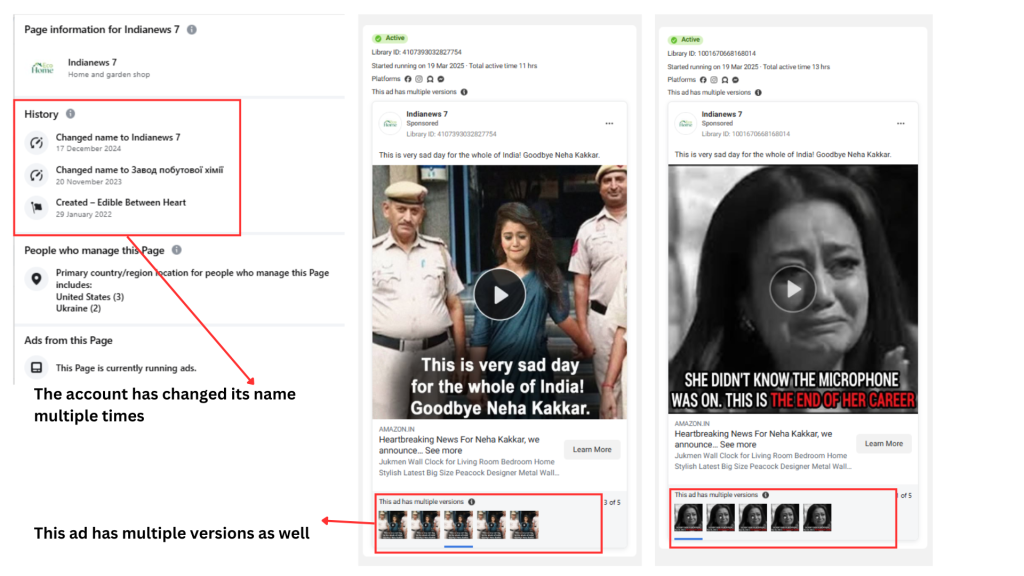

Our investigation uncovered over 200 advertisements featuring Indian celebrities actively running on Meta platforms. These ads typically run for 9–10 days with a significant ad spend of approximately $1,200 per campaign. Most of the creatives used in these campaigns were low-quality deepfakes, including morphed visuals and misleading voiceovers designed to deceive audiences.

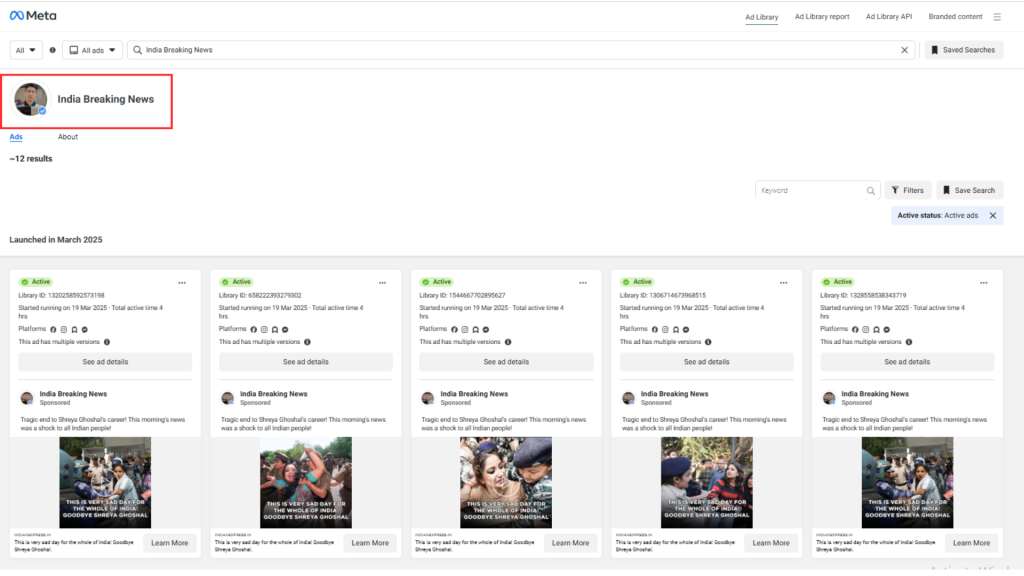

Upon examining the advertiser profiles, we identified recurring patterns. A few prominent accounts (‘Indianews1’, ‘India Breaking News’ and ‘Indianews2’) were frequently linked to these promotions. These pages have a history of name changes, function solely as promotional fronts, and appear to be operated from locations outside India, despite targeting Indian users.

In a notable example, between April 1 and April 21, 2025, we tracked 87 ads impersonating a single Indian celebrity, highlighting the scale and intensity of the campaign.

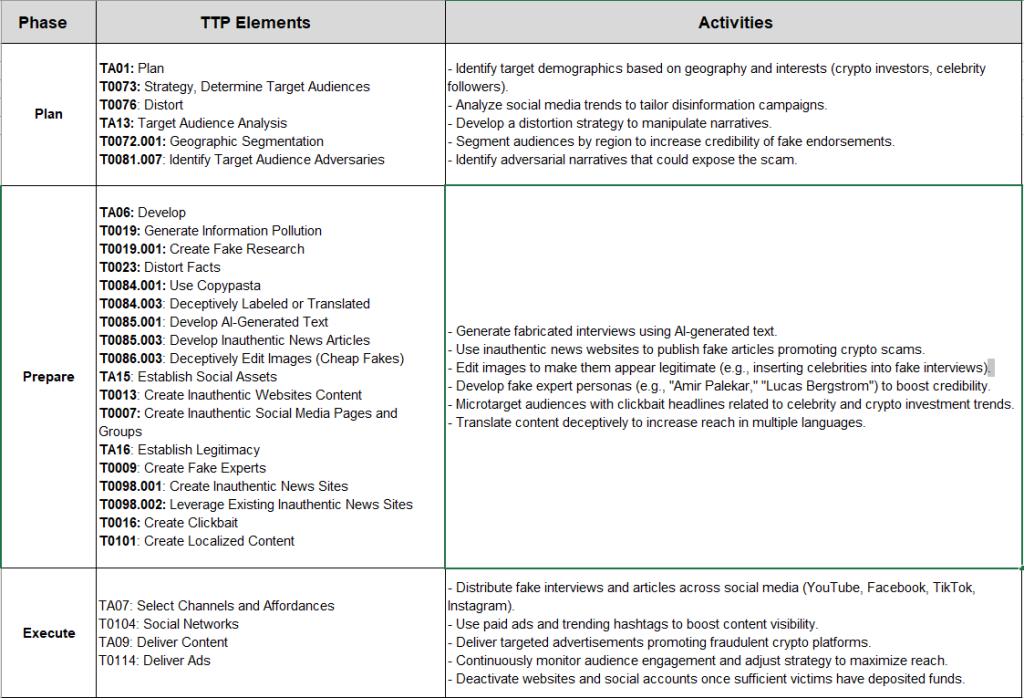

DISARM Framework: Mapping the Disinformation Ecosystem

To systematically decode the mechanics of this AI-driven crypto scam, we developed and applied a tailored DISARM Framework—a structured model to analyze disinformation operations. The framework helped us trace the lifecycle of the campaign, map the threat actors, understand their tactics, and identify targeted audiences.

The observed scam operation follows a clear disinformation lifecycle that aligns with the DISARM framework. It begins with detecting suspicious patterns like AI-generated content, fake celebrity endorsements, and low-trust websites. Investigation then uncovers shared infrastructure, fabricated personas, and manipulated media. To stop the campaign, platforms can take down fake websites, block malicious ads, and suspend accounts. The assessment phase involves analyzing how far the content has spread and which demographics were targeted. Response includes public awareness campaigns, fact-checks, and collaboration with media to counter false narratives. Finally, mitigation focuses on long-term deterrence through exposure of tactics and advocating for stricter platform policies.

Case Studies

1. Amitabh Bachchan (India)

- Ad Preview: “Exclusive Interview: Amitabh Bachchan’s Shock Crypto Confession.”

- Landing Page: Cloned “Indian Express” layout; URL “indianexpress.news”.

- Fake Interview: AI Q&A praising “FastX” platform; quoted ROI of 300% in 24 hrs.

- Editor Testimonial: “Amir Palekar” with a screenshot showing an INR 21,000 deposit credited.

- Outcome: Site vanished after 4 days; victims reported average losses of INR 35,000.

2. Portuguese Pop Star (Europe)

- Similar Workflow: Clone of “Correio da Manhã”; persona “Lucas Bergstrom.”

- Metrics: €800 median loss; domain active for 3 days; ad spend €900.

Conclusion

Our investigation reveals a vast and troubling scam network that’s taking advantage of people’s trust in celebrities and well-known news platforms. Using deepfake videos, AI-generated content, and fake websites that look like real news portals, scammers are tricking users around the world into falling for fraudulent investment schemes. These scams aren’t just random—they’re carefully planned operations, using smart technology and psychological manipulation to target people on a global scale.

Through our DISARM Framework, we were able to trace the players, strategies, and digital tools used to run this operation. From fake editor profiles to ad accounts spending thousands of dollars, the scam shows clear signs of being professionally run, with one goal: to mislead and make money.

What this report makes clear is that fighting such scams needs to be a shared responsibility. Tech platforms must act faster, regulators need to take stronger steps, and the public must be better informed about how these scams work. With AI technology advancing rapidly, the tools for deception are becoming easier to access—making awareness, collaboration, and transparency more important than ever.

Note: The findings in this report are based on a combination of open-source intelligence (OSINT), platform transparency tools like Meta’s Ad Library, reverse image search techniques, and AI content analysis. Between February and April 2025, we examined over 80 scam websites, tracked more than 200 advertisements across multiple countries, and analyzed ad account behavior, hosting patterns, and fake personas. All data was gathered from publicly available sources and AI-based detection tools, ensuring transparency and reliability in the research process.