The saying “pics or it didn’t happen” used to be a foolproof way to demand evidence for outrageous online stories. However, with the rise of AI image generators, discerning truth from fiction has become a much trickier proposition.

The social media platforms where these images spread use machine learning and natural language processing to analyze our online behavior, including our likes, interests, and actions, so that they can tailor content specifically to us. The danger is that if we keep engaging with AI-generated fake news, the algorithms may interpret this as a preference for such content. Our feeds could then fill up with fabricated stories that drown out legitimate news sources, leaving the truth buried six feet under, as the saying goes.

Stanford Internet Observatory research indicates that most AI-generated images currently circulating on social media are designed to be clickbait. Their creators aim to manipulate users into sharing and interacting with the content in order to build an audience and establish a false sense of legitimacy.

Why Are the New AI Images of Elon Musk and Mark Zuckerberg So Unsettling?

You might find them funny, but they are a telling example of how dangerous AI-generated imagery can be.

The internet is ablaze with misinformation, and frustrated users are resorting to drastic measures to get social media platforms to act. Forget angry tweets and scathing comments – the new weapon in the fight against fake news is…shockingly fake photos.

It sounds strange, but here’s the plan. Internet users are creating AI-generated images of outlandish scenarios, like Elon Musk and Mark Zuckerberg in a compromising situation. These obviously fake photos are then spread online, highlighting the platforms’ inability to catch such content.

Why the weird tactic? It’s all about grabbing attention. By flooding social media with clear-cut fakes, users want to expose the platforms’ blind spots when it comes to detecting misinformation. It’s a desperate attempt to push these companies into taking a more proactive stance against the real fake news plaguing the internet.

There’s a method to this madness.

This strategy goes beyond simply exposing platform weaknesses. The shocking nature of these fabricated photos can spark wider public conversations about the dangers of online misinformation. Not everyone has the digital literacy to readily identify a fake, and these ridiculous yet provocative images can raise awareness about the issue. Furthermore, a public outcry generated by this tactic can pressure platforms to re-evaluate their content moderation policies. Negative publicity might just be the push needed to prioritize tackling fake news more effectively.

However, this approach isn’t without its concerns. Recent incidents in which Elon Musk’s own AI chatbot, Grok, spread misinformation show how this tactic could backfire and feed the very problem it aims to solve.

The Case of Grok AI’s Fake News on the Indian Elections

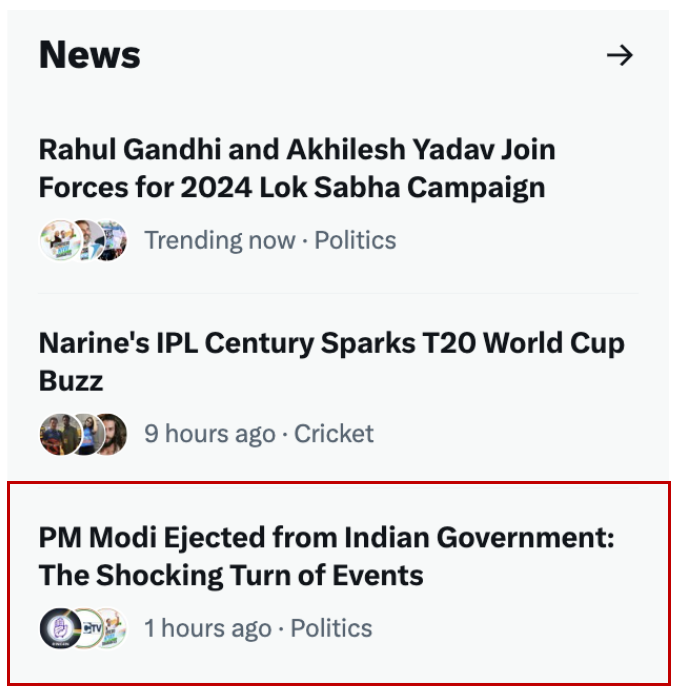

Elon Musk’s Grok AI recently came under fire for promoting false news stories about world events. One notable instance involved a fake report claiming that Indian Prime Minister Narendra Modi had lost an election that had not yet taken place when the story appeared.

“PM Modi Ejected from Indian Government,” the headline read. An accompanying article claimed the “shocking turn of events” represented a “significant change in India’s political landscape,” sparking a wide range of reactions across the nation.

What made this so alarming is that the story appeared just as the Indian elections were about to begin.

This incident not only demonstrated the potential dangers of AI-generated fake news but also sparked a broader conversation about the role of AI in spreading misinformation.

Despite Musk’s advocacy for “citizen journalism” and his criticism of traditional media, the Grok AI incident highlighted the need for greater accountability and caution in using AI for news dissemination.

What About Platforms Other Than Twitter?

Ironically, technology companies, including Intel and OpenAI, have started work on AI-powered tools to detect AI images. Images produced with OpenAI tools, like DALL-E, will include metadata indicating their AI origin. Meta, on the other hand, plans to tag AI content with ‘Made with AI’ and ‘Imagined with AI’ labels once detected.

The Call for Action

Whether this fight-fire-with-fire approach will work remains to be seen. But one thing’s for sure: internet users are fed up with fake news, and they’re getting creative in their fight to stop it.

The tactic of using shock value to pressure social media platforms reflects a growing frustration with the spread of fake news and misinformation online. Internet users are demanding more robust efforts from these platforms to address this pervasive issue. By creating and sharing AI-generated photos that clearly violate community guidelines, they hope to force platforms to take more proactive measures in combating fake news.

The race is on to find a solution before AI-generated images become convincing enough for the problem to get truly out of hand. We don’t yet know who will win, but we have a pretty good idea of what we stand to lose.

At the same time, the proliferation of AI-generated images puts more onus on each of us to check the media we consume, lest we be duped.